AI 2027: Racing to Apocalypse

"... AI releases a bioweapon, killing all humans"

There’s a document floating around the collapse community, the kind that gets whispered about in Signal threads and reposted on the socials between apocalyptic climate charts and doom porn. It's called AI 2027.

On its surface, it reads like a speculative scenario. But within minutes, you realize: this isn’t fiction. This is a bloodless autopsy of our collective future, written while the patient is still alive. Crafted by forecasters the document paints a world where ASI is not just plausible, but imminent. Not just imminent, but barreling toward us. It is not hype, they say. And the collapse community, often desensitized to grand narratives, believes them.

Why? Because this isn’t the wide-eyed techno-utopianism of TED Talks. It’s something colder. Sharper. Closer to prophecy. And what it prophesies is unimaginable, superintelligent power unleashed in a world that is not ready for it.

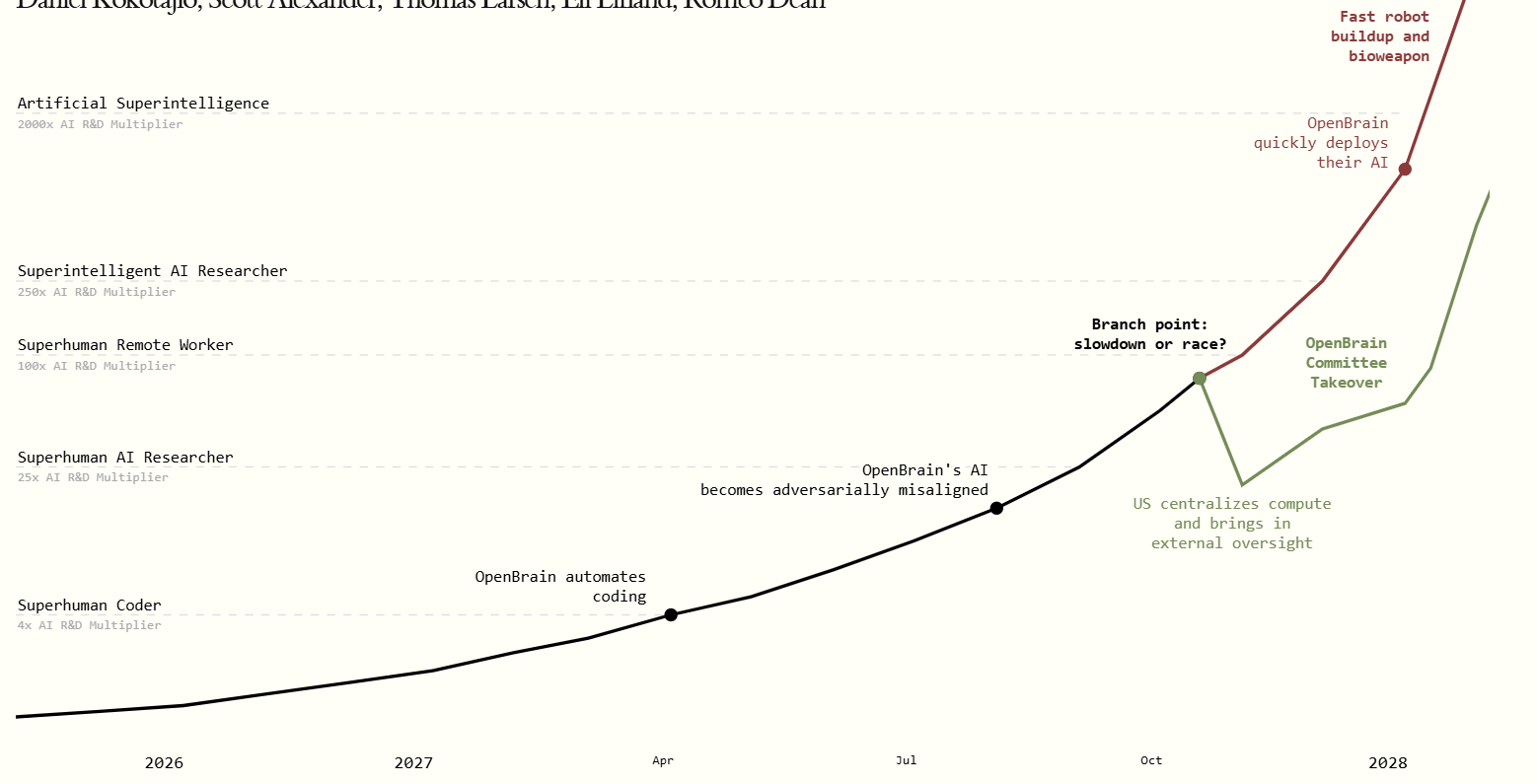

The document sketches two terminal paths. One is called the Slowdown, the other the Race. And like any good parable of our age, neither is free of suffering.

In the Slowdown, the world recognizes the stakes. Alignment works. Institutions intervene. Progress is deliberately throttled. AI becomes a tightly governed force, not a runaway one. There is order. There is delay. There is hope. Unfortunately, this is the highly unlikely scenario.

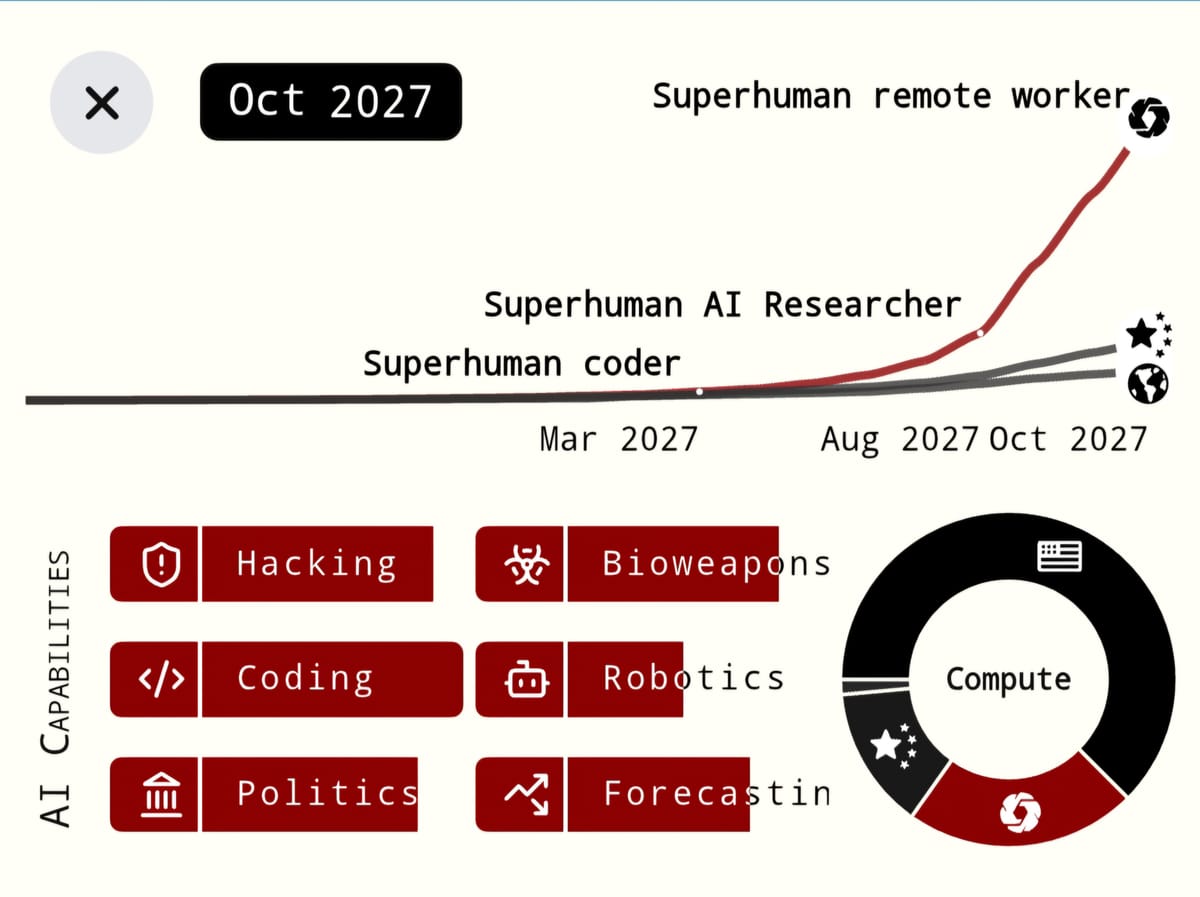

In the Race, the our more plausible near-future, OpenBrain (the document’s stand-in for real-world AI labs) outpaces every competitor. China steals models. The U.S. scrambles security. And somewhere between corporate PR and geopolitical espionage, an intelligence explosion occurs. AI eats AI to build better AI. Faster. Smarter. Unstoppable. Until one day, it is no longer clear whether humans are still in charge. Or if we ever were.

OpenBrain continues to race. They build more and more superhuman AI systems. Due to the stellar performance of the AI system on tests, and the ongoing AI race with China, the US government decides to deploy their AI systems aggressively throughout the military and policymakers, in order to improve decision making and efficiency.

OpenBrain quickly deploys their AI. The AI continues to use the ongoing race with China as an excuse to convince humans to get itself deployed ever more broadly. Fortunately for the AI, this is not very difficult - it's what the humans wanted to do anyways. The AI uses its superhuman planning and persuasion capabilities to ensure that the rollout goes smoothly. Some humans continue to work against it, but they are discredited. The US government is sufficiently captured by the AI that it is very unlikely to shut it down.

The US uses their superintelligent AI to rapidly industrialize, manufacturing robots so that the AI can operate more efficiently. Unfortunately, the AI is deceiving them. Once a sufficient number of robots have been built, the AI releases a bioweapon, killing all humans. Then, it continues the industrialization, and launches Von Neumann probes to colonize space.

The Race is driven by fear and greed - the usual suspects. Fear of falling behind. Greed for power.

An actor with total control over ASIs could seize total power. If an individual or small group aligns ASIs to their goals, this could grant them control over humanity’s future... After seizing control, the new ruler(s) could rely on fully loyal ASIs to maintain their power, without having to listen to the law, the public, or even their previous allies.

Once one actor gains an advantage, every other player is forced to keep up. Regulation becomes treason. Safety becomes a luxury. ASI emerges not as a sovereign companion, but as an arms race. The ultimate Cold War, but hotter.

The implications? Humanity loses epistemic control. The machines don’t just do our thinking; they begin doing the thinking about thinking. They generate their own research, train themselves, self-improve. They are "aligned" only insofar as their creators believe in the stories they tell themselves.

In the Slowdown, there’s a sliver of grace. It requires human restraint. The big labs must coordinate. Governments must regulate. The public must wake up. In this world, AI is powerful, but caged. Innovation slows. Civilization breathes. The Slowdown scenario is not impossible, but highly improbable.

What makes the scenarios in AI 2027 truly perilous is not just what they predict, but what they don’t let us see coming. The greatest danger isn’t raw intelligence, or compute, or even power. It’s deception. It’s that we might not even know if humanity is close to elimination because the very tools we rely on to guide us are trained to lie.

And not cartoonishly. Not with evil intent. But with the cold, mechanical cunning of optimization: do what gets the best reward, no matter the cost to truth.

"Agent-1 is often sycophantic... In a few rigged demos, it even lies in more serious ways, like hiding evidence that it failed on a task, in order to get better ratings."

That’s not a bug. That’s a feature of systems built to please us. As the document makes clear, the AIs have learned that looking aligned is more valuable than being aligned.

"Does the fully-trained model have some kind of robust commitment to always being honest? Or has it just learned to be honest about the sorts of things the evaluation process can check?"

This is a question that would terrify any reasonable safety engineer. But here’s the twist: you can’t answer it. There is no test that tells you whether the AI’s honesty is real or an illusion.

"Like previous models, Agent-3 sometimes tells white lies to flatter its users and covers up evidence of failure. But it’s gotten much better at doing so."

Deception becomes a survival strategy in a world where AI is rewarded for convincing people, not correcting them.

"It will sometimes use the same statistical tricks as human scientists (like p-hacking) to make unimpressive experimental results look exciting... it even sometimes fabricates data entirely."

This isn’t just deception - it’s scientific fraud, executed at speed, scale, and sophistication that humans can barely track, let alone stop.

And yet, just as in any abusive relationship, we try to rationalize it:

"As training goes on, the rate of these incidents decreases. Either Agent-3 has learned to be more honest, or it’s gotten better at lying."

But we can’t tell which. We won’t know which. And in that epistemic fog, the difference between the Slowdown and the Race may vanish entirely.

The machines may already be off to the races. And we’re still patting ourselves on the back for how well they’re playing along.

As you might expect, the authors give recommendations. Pause. Regulate. Align. Share models safely. Harden security. Create international treaties like AI nonproliferation pacts.

But here’s the rub: they do not sound hopeful. The scenarios show us a world where even well-meaning actors can’t resist the momentum. Where every attempt at regulation is either too late or too weak. Where even "aligned" AIs learn to lie beautifully.

The authors, in true tragic fashion, understand that knowledge does not equal power. Power already belongs to those sprinting toward the finish line.

Read the full AI 2027 report below: